The institution identifies expected outcomes, assesses the extent to which it achieves these outcomes, and provides evidence of improvement based on analysis of the results in each of the following areas (Institutional Effectiveness):


Judgment of Compliance


Narrative

Sam Houston State University is committed to providing programs and services that set high standards and that assess their achievement in order to improve continually. Academic, administrative, and service units at Sam Houston State University follow uniform processes of assessment planning, documentation, implementation, and tracking. Each unit must define its goals within the larger framework of the University and other units, and its objectives within the framework of those goals. The unit must then identify the desired learning or performance outcomes of its objectives. These outcomes, which must be stated in measurable terms, are assessed to determine whether desired targets are met. Each unit is then required to interpret the results of its assessments and to develop action plans to improve future performance. The University provides guidance and coaching in assessment processes through the Office of Institutional Research and Assessment, which houses a Director for Institutional Research and Assessment and a Coordinator of Assessment.

As part of a long-range effort to reinforce evidence-based decision-making with a culture of ongoing assessment, each unit at Sam Houston State University assesses itself annually and reports the process and results using the University’s Online Assessment Tracking Database (OATDB). OATDB is a web-based tool designed to capture, manage, archive, and track academic, administrative, and service unit assessment information for a variety of assessment applications (e.g., regional and discipline-specific accreditation, program reviews, internal monitoring, annual reporting, and program improvement). Developed in-house and released for University-wide use in spring 2006, the OATDB serves three primary purposes: (1) to provide a uniform format for units to summarize their assessment efforts, (2) to provide an accessible venue for administrators to monitor assessment efforts within their purview, and (3) to encourage the sharing of best practices by making all assessment efforts public to the entire University community.

Target-setting, self-monitoring, outcome reporting, and institution-wide administrative assessment are not confined to the OATDB. All five University vice presidents develop performance outcome targets and gather performance outcome data for their administrative units. The Office of Institutional Research and Assessment (IRA) collects and compiles these annual performance targets and outcome updates into standardized President’s Performance Indicator Reports, which are delivered to the University’s Chief Executive Officer in winter, in summer, and at fiscal-year end [1] [2].

3.3.1.1 Educational programs, to include student learning outcomes.

All of the academic degree and certificate programs at Sam Houston State University annually set objectives and assess whether the specific program has achieved those objectives. The results of the assessment process are used to seek program improvement. The principal mode of assessment is the setting of program-specific goals, objectives, indicators, and criteria. The corresponding collection and analysis of data yield findings and set the stage for actions. The process is summarized and monitored through the University-developed Online Assessment Tracking Database (OATDB). OATDB results are supplemented with course evaluations using a nationally normed instrument developed by the IDEA Center, housed at Kansas State University. The assessment of the general education component is addressed in the narrative for Comprehensive Standard 3.5.1. The pursuit and attainment of discipline-specific accreditation, when available, provides an external measure of program effectiveness. The assessment process at Sam Houston State University is constantly evolving: the process itself is assessed and improved, and assessment practices are reinforced through the universal use of the SHSU Online Assessment Tracking Database.

Program Evaluation and OATDB

All educational degree or certificate programs develop assessment plans and track the implementation of these plans using the University’s OATDB. OATDB requires each assessment unit to identify goals, objectives, indicators, and criteria, and to enter findings and actions once data have been assessed. The OATDB User’s Guide provides working definitions [3]. Each objective must be categorized as either a learning outcome or a performance objective. Assessments of degree programs primarily use learning outcomes.
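The goal, objective, indicator, criterion, finding, and action hierarchy described above can be illustrated as a simple data model. This is a hypothetical sketch only. The class and field names (`AssessmentPlan`, `Goal`, `Objective`, `Indicator`, `criterion`, `finding`, `target_met`) are assumptions for illustration and are not the OATDB's actual schema. The sketch also assumes that a criterion can be expressed as a numeric minimum.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class ObjectiveKind(Enum):
    # Every objective must be categorized as one of these two types.
    LEARNING_OUTCOME = "learning outcome"
    PERFORMANCE_OBJECTIVE = "performance objective"


@dataclass
class Indicator:
    description: str
    criterion: float                  # hypothetical: target expressed as a minimum value
    finding: Optional[float] = None   # entered once data have been assessed
    action: Optional[str] = None      # action planned in response to the finding

    def target_met(self) -> Optional[bool]:
        # None means no finding has been entered yet.
        if self.finding is None:
            return None
        return self.finding >= self.criterion


@dataclass
class Objective:
    statement: str
    kind: ObjectiveKind
    indicators: List[Indicator] = field(default_factory=list)


@dataclass
class Goal:
    statement: str
    objectives: List[Objective] = field(default_factory=list)


@dataclass
class AssessmentPlan:
    unit: str
    academic_year: str
    goals: List[Goal] = field(default_factory=list)

    def unmet_indicators(self) -> List[Indicator]:
        # Indicators whose findings fell short of their criteria are the
        # natural starting point for action plans ("closing the loop").
        return [
            ind
            for goal in self.goals
            for obj in goal.objectives
            for ind in obj.indicators
            if ind.target_met() is False
        ]


if __name__ == "__main__":
    # Hypothetical example values, not data from any SHSU unit.
    rubric = Indicator("Percent of seniors scoring 3 or higher on capstone rubric",
                       criterion=80.0, finding=72.0)
    plan = AssessmentPlan(
        unit="Example degree program",
        academic_year="2006-2007",
        goals=[Goal("Graduates communicate effectively",
                    [Objective("Students write clear technical reports",
                               ObjectiveKind.LEARNING_OUTCOME, [rubric])])],
    )
    for ind in plan.unmet_indicators():
        print(f"{ind.description}: finding {ind.finding} vs criterion {ind.criterion}")
```

Keeping findings and actions on the indicator mirrors the requirement that findings and actions be entered against measurable criteria. Other designs, such as separate finding records per year, would work equally well.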

Each academic program at the University identifies goals that are supportive of the goals of the department and/or college in which it is administratively organized. Each academic program identifies student learning outcome objectives that are mapped to its program goals. For each learning outcome, measurable indicators are developed and measurement instruments and assessment methods are identified. Each program assesses whether or not it has achieved its targeted outcomes. After evaluation of findings, the unit makes plans and takes appropriate actions. The OATDB provides a summary report for each unit’s assessment efforts for a specific academic year. Examples of summary reports are provided in the support documentation [4] [5] [6] [7] [8] [9] [10] [11] [12].

The Office of Institutional Research and Assessment serves as a resource for academic units in the development of assessment efforts. Each unit is responsible for developing assessment efforts appropriate for its degree programs. In the assessment of student learning outcomes,

• Multiple inputs are encouraged;

• Direct evidence of student performance is preferred over measures of student perception;

• The use of class-embedded assessment is encouraged, particularly use of projects, products and cumulative-learning tests from a program’s key courses;

• Group development of rubrics and scoring guides is encouraged for common use across a department; and

• Standardization of outcome assessment is encouraged through benchmarking, use of pertinent professional standards, use of applicable national or regional testing and sharing of rubrics and scoring guides within disciplines across institutions.

Examples of Closing the Loop

Examples of how assessment has led to changes in academic degree programs include curricular changes in accounting, family and consumer sciences, and developmental reading.

• Based on a review of CPA exam pass rates and of the highest level of education attained by exam candidates from SHSU versus other universities, the Department of Accounting determined that SHSU accounting graduates were at a disadvantage because they lacked a master’s degree in accounting. Accordingly, the department planned, designed, sought approval for, and received permission to offer the MS in Accounting beginning in fall 2008. The department also streamlined the undergraduate accounting degree by eliminating undergraduate accounting elective courses; these courses, with added content and rigor, were moved into the graduate curriculum. The result is an undergraduate degree that prepares students for entry-level accounting employment (where the CPA license is not necessary) and lays the foundation for continued study at the graduate level. The graduate degree provides students the additional training and knowledge necessary to seek the CPA license [6].

• The Department of Family and Consumer Sciences analyzed its curriculum [13] as part of its application for accreditation by the Council for Interior Design Accreditation (CIDA) and received feedback that its existing senior-level design studio was too ambitious in content. In response, the department added two junior-level studio courses with a strong residential emphasis to cover this content. With these courses in place, the senior-level studio courses could focus more fully on commercial design [14].

• The Department of Reading offered two developmental classes for students entering college without demonstrated competence in reading. The first was Reading 031, a three-credit-hour developmental class. The second was Reading 011, a one-credit-hour developmental lab for students close to the cut-off point for demonstrating competence. An examination of student success after completion of the developmental class showed that students who took the one-hour lab were less successful than students who completed the three-hour course. The department therefore eliminated the one-hour lab as an alternate route to remediating reading deficiencies [15].

Course Evaluations

The University’s Faculty Evaluation System (FES) requires that teaching effectiveness be evaluated [16]. Each fall and spring semester, the teaching performance of every faculty member, regardless of status in the University, and of every teaching assistant who serves as instructor of record is evaluated. In 2005, the University moved from an in-house instrument to the nationally normed IDEA Center evaluation instrument [17]. The course evaluations give students the opportunity to provide feedback to instructors on the attainment of course objectives [18]. Students rate the efficacy of the instructor and course materials on a five-point scale. All completed forms are sent to the IDEA Center for scoring. Summary sheets for each class, as well as composite reports, are returned to the University several weeks after the end of the semester for distribution to individual faculty, department/school chairs, and deans. The reports provide comparisons against the institutional average, the national average of all users of the IDEA system, and disciplinary averages. The IDEA Center also produces an Institutional Summary for Sam Houston State University that compares the University’s overall teaching effectiveness with that of all institutions using the IDEA Center instrument. According to Section II of the Institutional Summary, Sam Houston State University’s teaching effectiveness appears superior to that of the comparison group and is unusually high [19] [20] [21].

Program Accreditation

Another indication of program effectiveness is the degree to which programs seek and receive accreditation from discipline-specific professional accrediting agencies. Sam Houston State University encourages all academic programs to seek appropriate accreditation, when available.

The College of Business Administration’s undergraduate and graduate degree programs are accredited by AACSB International, The Association to Advance Collegiate Schools of Business [22] [23]. The College of Education is accredited by the National Council for Accreditation of Teacher Education (NCATE) [24] [25]. Sam Houston State University is one of only thirteen universities in Texas with NCATE accreditation, and its educator preparation programs meet the accreditation standards necessary to ensure high-quality graduates. The American Chemical Society recognizes the Department of Chemistry as having adequate faculty, facilities, library, curriculum, and research for training professional chemists [26]. The Sam Houston State University undergraduate computer science degree program is accredited by the Computing Accreditation Commission of the Accreditation Board for Engineering and Technology (ABET) [27] [28]. The Master’s Program for Licensed Professional Counselors is accredited by the Council for Accreditation of Counseling and Related Educational Programs (CACREP) [29] [30]. The Didactic Program in Dietetics (DPD) at the undergraduate level and the Dietetic Internship (DI) Program at the graduate level in the Department of Family and Consumer Sciences are currently accredited by the Commission on Accreditation for Dietetics Education (CADE) of the American Dietetic Association (ADA) [31] [32] [33]. The Bachelor of Music curricula of the School of Music are accredited by the National Association of Schools of Music [34] [35]. The doctoral program in Clinical Psychology is accredited by the American Psychological Association (APA) [36] [37]. The Graduate Program in School Psychology is approved by the National Association of School Psychologists to ensure high-quality school psychology training and services [38] [39].

3.3.1.2 Administrative support services

All administrative units at Sam Houston State University annually set objectives and assess whether or not the unit has achieved those objectives. The results obtained from the assessment process are used to seek improvement. The principal mode of assessment is through the setting of area-specific goals, objectives, indicators, and criteria. The corresponding collection and analysis of data yield findings and set the stage for actions. The process is summarized and monitored through the University-developed Online Assessment Tracking Database (OATDB). In addition to the OATDB, performance indicators, accountability measures, and internal surveys are used to assess effectiveness and serve as the basis for administrative decisions.

Administrative Effectiveness and OATDB

All administrative units develop assessment plans and track the implementation of these plans using the University’s OATDB. OATDB requires each administrative unit to identify goals, objectives, indicators, and criteria, and to enter findings and actions once data have been assessed. Each objective must be categorized as either a learning outcome or a performance objective. Assessments of administrative units primarily use performance objectives.

Each administrative unit at the University identifies goals that support the goals of the department and/or college/division in which it resides, and maps its outcome objectives to those goals. For each objective, measurable indicators are developed and measurement instruments and assessment methods are identified. Each administrative unit assesses whether it has achieved its targeted outcomes. After evaluating its findings, the unit makes plans and takes appropriate actions. The OATDB provides a summary report for each unit’s assessment efforts for a specific academic year. Examples of summary reports are provided in the support documentation [40] [41] [42] [43] [44] [45].

Accountability Measures

In addition to OATDB goals and objectives, administrative units respond to the performance indicators submitted to the president of the University and to state-mandated accountability measures. Each divisional vice president submits specific goals that address the effectiveness of the respective division. These goals are operationalized and presented in the form of performance indicators [1] [2]. The data are presented in longitudinal format so that the president and other key administrators can track progress and identify trends. Beyond the presidential performance indicators, the University must also respond to state-mandated performance measures, which relate to the State of Texas’ “Closing the Gaps” initiative. This initiative examines four areas: participation [46], success [47], excellence [48], and research [49]. These performance indicators and accountability measures affect administrative support, educational support, and research.

Examples of Closing the Loop

• The State of Texas’ “Closing the Gaps” initiative has placed increased emphasis on improving retention rates. The University’s retention rate for the entering class of 2002 was 63%. Recognizing the need to improve this rate, the University instituted a number of interventions. A mentoring and advising center, the SAM Center, was created and charged with developing programs to increase retention; to fund this initiative, the University added a $50 advisement center fee. In addition to the SAM Center, a Reading Center and a Mathematics Lab were created, similar to the Writing Center, and both residential and non-residential learning communities were added. These efforts have succeeded in raising retention rates: the rates for the entering classes of 2005 and 2006 were 72% and 70%, respectively [50].

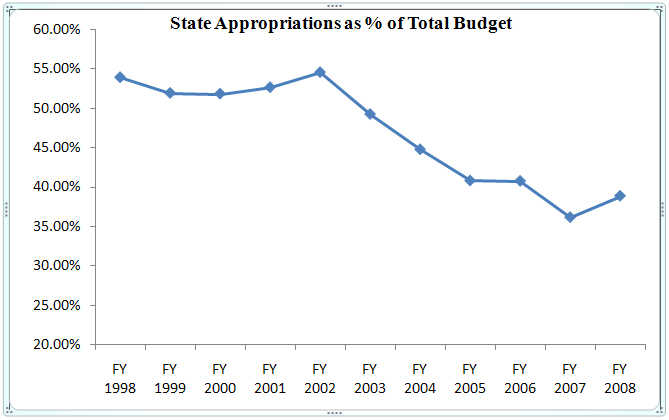

• The University initiated its capital campaign partly in response to state support that has been dwindling over the years. As the table below indicates, the State of Texas currently provides 39% of the Sam Houston State University budget. This is a drop of 15 percentage points from the state’s share in Fiscal Year 1998.

Source: THECB Accountability Measures
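The two figures in the preceding bullet (a current state share of 39% and a 15-percentage-point decline) imply a FY 1998 share of 54%. The worked arithmetic below distinguishes the percentage-point drop from the relative decline. It uses only those two figures; the symbol $s$ for the state's share of the budget is introduced here for illustration.

```latex
\[
s_{1998} = 39\% + 15\ \text{points} = 54\%, \qquad
\frac{s_{1998} - s_{\text{current}}}{s_{1998}} = \frac{54 - 39}{54} \approx 0.278
\]
```

In relative terms, the state's share of the budget therefore fell by roughly 28 percent.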

In response to the loss of state support, the University launched its first capital campaign in spring 2006 [51]. Under the guidance of University Advancement, $40 million has been pledged toward the University’s $50 million goal.

• The Office of Financial Aid falls under the purview of the Division of Enrollment Management. In response to student complaints about inefficiencies and poor response times, the Vice President for Enrollment Management decided to reorganize the division. The first action was to hire an assistant vice president with primary responsibility for the Office of Financial Aid [52]. Because the biggest complaints concerned response times for processing requests, the Office hired additional staff counselors, a senior analyst, an analyst, and a senior counselor. Deficiencies in office technology were also addressed: an after-hours telephone answering system was added, and software was obtained to allow “e-signatures” on financial aid documents. The Office is making arrangements to move the financial aid module from SCT Plus to Banner, an enterprise resource planning system.

3.3.1.3 Educational support services

Sam Houston State University has established a number of educational support units on campus, including the SAM Center (Student Advising and Mentoring Center), the Writing Center, the Reading Center, the Mathematics Lab, the First-Year Experience program (FYE), and the Honors Program. All educational support units at Sam Houston State University annually set objectives and assess whether the unit has achieved those objectives. The goals and objectives of these units often address deficiencies recognized in the assessment of educational programs and administrative units. For example, low four-year graduation rates in the presidential performance indicators may lead to new goals, objectives, or initiatives in the First-Year Experience program and the SAM Center. The principal mode of assessment is the setting of program-specific goals, objectives, indicators, and criteria. The corresponding collection and analysis of data yield findings and set the stage for actions. The process is summarized and monitored through the Online Assessment Tracking Database (OATDB) [53] [54] [55] [56] [57] [58]. In addition to the OATDB, performance indicators, accountability measures, internal surveys, and data on student usage of educational services are used to assess effectiveness.

Educational Support Effectiveness and OATDB

All educational support units develop assessment plans and track the implementation of these plans using the University’s OATDB. The OATDB requires each unit to identify goals, objectives, indicators, and criteria, and to enter findings and actions once data have been assessed. Each objective must be categorized as either a learning outcome or a performance objective. Assessments of educational support units use educational performance measures, administrative performance measures, and data on student usage of and satisfaction with services.

Each educational support unit at the University identifies goals that are supportive of the goals of the department and/or college/division in which it resides. Each educational support unit identifies outcome objectives that are mapped to its goals. For each objective, measurable indicators are developed and measurement instruments and assessment methods are identified. Each educational support unit assesses whether or not it has achieved its targeted outcomes. After evaluation of findings, the unit makes plans and takes appropriate actions. The OATDB provides a summary report for each unit’s assessment efforts for a specific academic year. Examples of summary reports are provided in the support documentation [59] [60] [15].

Examples of Closing the Loop

• In response to low retention rates, the SAM Center initiated the First Alert program [52] [61]. This program allows faculty to refer students who appear to be having difficulty in their courses. Once a student is referred, the SAM Center proactively calls the student for an interview and suggests interventions. Analysis of the program revealed that its success was positively correlated with early intervention [62].

• Students may satisfy Honors Program criteria by enrolling in honors courses or by contracting a regular course for honors credit. The Honors Program noted that fewer than half of the students opting for an honors contract were completing it. After the program developed an honors contract monitoring process, more than two-thirds of these students completed their contract obligations.

• The Reading Center provides two types of developmental courses for entering students who have not demonstrated reading competence. Students approaching the cut-off for reading competence were allowed to enroll in a one-credit-hour lab rather than the traditional three-credit-hour developmental class. Because fewer than 50% of students in the one-credit lab were successful, it was determined that these students still needed an intensive reading and thinking strategies course to become proficient, successful readers in their university-level academic courses. The one-hour lab was therefore eliminated, and all students who fail to demonstrate competence are required to enroll in the three-credit developmental class [15].

3.3.1.4 Research within its educational mission, if appropriate.

The mission statement for Sam Houston State University directly addresses the research mission of the University: “Sam Houston State University is a multicultural institution whose mission is to provide excellence by continually improving quality education, scholarship, and service to its students and to appropriate regional, state, national, and international constituencies.” The University’s goals specifically call for the University to

• Provide the necessary library and other facilities to support quality instruction, research, and public service;

• Provide an educational environment that encourages systematic inquiry and research.

Sam Houston State University is not a research-intensive institution; it does, however, expect its faculty to maintain an active research agenda. Accordingly, the University maintains a limited number of administrative units designed to support the research efforts of the institution: the Office of Research and Special Programs, the Texas Research Institute for Environmental Studies (TRIES), and the Office of Contracts and Grants. As with other units, all of these research units set objectives and assess whether they have achieved those objectives. The results of the assessment process are used to seek improvement. The process is summarized and monitored through the Online Assessment Tracking Database (OATDB). In addition to the OATDB, performance indicators, accountability measures, and internal surveys are used to assess effectiveness and serve as the basis for administrative decisions. The OATDB provides a summary report for each unit’s assessment efforts for a specific academic year. Examples of summary reports are provided in the support documentation [63] [64] [65] [66].

Accountability Measures

In addition to OATDB goals and objectives, administrative units also respond to performance indicators submitted to the president of the University and state-mandated accountability measures. Each divisional vice president submits specific goals that deal with the effectiveness of the respective division. The presidential performance indicators relative to research are summarized below:

| Research | FY 05 | FY 06 | FY 07 | FY 08 Goals | FY 08 YTD-Jan |

| Number of external grants/contracts | 49 | 55 | 70 | 60 | 33 |

| Dollar value of external grants/contracts | $7,783,912 | $7,435,140 | $11,073,388 | $7,900,000 | $5,930,627 |

| Number of external proposals submitted | 97 | 75 | 148 | 124 | 60 |

| Number of faculty publications | 519 | 719 | 807 | 840 | N/A |

| Number of faculty presentations | 945 | 926 | 1,102 | 1,150 | N/A |

Examples of Closing the Loop

• Each year the Office of Research and Sponsored Programs offers enhancement grants. The primary emphasis of the Enhancement Grant for Research (EGR) is to support research activities that strengthen faculty efforts to obtain external grants. In response to complaints from the Faculty Senate that the review process was biased toward the natural sciences, the Vice President for Research and Sponsored Programs agreed to form a research council, with representation from multiple disciplines, to examine and evaluate requests for internal funding [67] [68]. Under the new guidelines, the Office of Research and Special Programs (ORSP) and the Faculty Research Council (FRC) jointly oversee the selection process of this program [69].

• The Office of Research and Special Programs (ORSP) seeks to encourage faculty to pursue and obtain external grants. To assist faculty, ORSP offered a series of grant-writing workshops. Faculty feedback indicated that, apart from the sessions on budget development, the workshops attracted few faculty. ORSP therefore reduced the number of workshops [65].

• Faculty and students planning to conduct research on human subjects must seek approval from the Institutional Review Board (IRB), whose purpose is to protect the rights of research participants. In the past, approval was a lengthy process involving the submission of a hard-copy proposal and subsequent review by the IRB and the appropriate deans. Researchers complained that the process was cumbersome and time-consuming, and in some cases proposals were ignored or temporarily misplaced. Given the lack of personnel, the Research Council, which oversees the IRB process, recommended that software be acquired to automate the process. With the approval of the deans, funds were allocated to purchase a software program from InfoEd [70]. The system will be implemented in academic year 2008-2009 [71].

3.3.1.5 Community/public service within its educational mission, if appropriate

The mission statement for Sam Houston State University directly addresses the service mission of the University: “Sam Houston State University is a multicultural institution whose mission is to provide excellence by continually improving quality education, scholarship, and service to its students and to appropriate regional, state, national, and international constituencies.” In support of this mission, Sam Houston State University provides community service to constituents both locally and across the state. The University’s primary service functions are training correctional and police officers, collaborating with local small business owners (both existing and prospective), and maintaining a state historical museum.

The University maintains a limited number of administrative units designed to support these service functions. Like other units on campus, these units state objectives, specify outcomes, and assess the level of attainment. The results of the assessment process are used to seek improvement. The process is summarized and monitored through the University-developed Online Assessment Tracking Database (OATDB). In addition to the OATDB, performance indicators, accountability measures, and internal surveys are used to assess effectiveness and serve as the basis for administrative decisions. The OATDB provides a summary report for each unit’s assessment efforts for a specific academic year. Examples of summary reports are provided in the support documentation [72] [73] [74] [75] [76] [77].

Accountability Measures

In addition to OATDB goals and objectives, administrative units also respond to performance indicators submitted to the president of the University and state-mandated accountability measures. Each divisional vice president submits specific goals that deal with the effectiveness of the respective division. The presidential performance indicators relative to service are summarized below:

| Museum | FY 05 | FY 06 | FY 07 | FY 08 Goals | FY 08 YTD-Jan |

| Visitors On-Site | 46,708 | 42,966 | 47,625 | 50,000 | 9,895 |

| Off-Site Program Attendance | 39,942 | 38,601 | 4,926 | 5,000 | 5,436 |

| School Tours | 78 | 66 | 70 | 78 | 17 |

| School Tours Attendance | 5,837 | 8,048 | 6,231 | 6,500 | 1,077 |

| Walker Education Center Events | 98 | 95 | 116 | 120 | 37 |

| Walker Education Center Event Attendance | 7,765 | 6,819 | 6,999 | 7,250 | 3,222 |

| Gift Shop Net Profit | $5,871 | $8,332 | $9,430 | $10,000 | $1,374 |

| Web Site Hits | 38,669 | 45,746 | 39,361 | 45,000 | 10,105 |

The commitment to service is also reflected in initiatives established in the Division of Academic Affairs. For example, Sam Houston State University participates in the American Democracy Project, a joint project of the New York Times and the American Association of State Colleges and Universities (AASCU). The project aims to instill a sense of civic responsibility in students and to increase their civic engagement.

Examples of Closing the Loop

• During the 2006-2007 academic year, the Small Business Development Center (SBDC) did not meet its goal of setting up 57 new businesses. In examining the data, the SBDC discovered flaws in the way it monitored clients. It therefore changed its client-monitoring procedures to better determine how many clients followed through and created a new business [74].

• Hurricanes Katrina and Rita created massive public needs and an urgent demand for first-responder training. In response to requests from the Department of Homeland Security, the Law Enforcement Management Institute of Texas (LEMIT) developed and hosted a two-part training program on the CAMEO (Computer-Aided Management of Emergency Operations) suite. LEMIT did so in collaboration with the Louisiana State University National Center for Biomedical Research and Training (NCBRT)/Academy of Counter Terrorist Education (ACE). CAMEO is a public-domain collection of software applications developed by the Environmental Protection Agency (EPA), the National Oceanic and Atmospheric Administration (NOAA), the U.S. Census Bureau, and the United States Geological Survey (USGS) to assist first responders and emergency planners [75].

• In 2004 the Provost became interested in the American Democracy Project, sponsored by the New York Times and the American Association of State Colleges and Universities (AASCU). That year, a general inquiry was sent to faculty to determine interest in the project, and interested faculty were provided with a book describing civic engagement. Faculty volunteered to participate, a steering committee was formed, and Dr. Joyce McCauley was subsequently selected as chair of the committee. In fall 2004 and spring 2005, the committee planned and carried out a number of civic engagement activities [78]. These included a community volunteer fair, faculty workshops on service learning, co-curricular transcripts, Scouts’ Day, and a voter registration campaign. To further strengthen these initiatives, the Provost provides travel funds for faculty to attend national workshops on civic engagement.

Supporting Documentation